Professor Lu Baoliang's team at Shanghai Jiaotong University publishes its latest research at ICLR 2024, a top machine learning conference.

Recently, the 12th International Conference on Learning Representations (ICLR 2024), one of the top international conferences in machine learning, announced its list of accepted papers. The paper "Large Brain Model for Learning Generic Representations with Tremendous EEG Data in BCI", by Professor Lu Baoliang of the Department of Computer Science and Engineering, School of Electronic Information and Electrical Engineering, Shanghai Jiaotong University, in collaboration with Shanghai Zero Unique Technology Co., Ltd., was selected as an ICLR 2024 Spotlight paper. A total of 7,262 papers were submitted worldwide, with an overall acceptance rate of about 31% and 5% selected as Spotlight papers.

Research background

At present, deep learning models based on electroencephalogram (EEG) signals are usually designed for specific datasets or specific brain-computer interface (BCI) tasks, which limits model scale and prevents them from fully exploiting the representational capacity of EEG signals. Recently, large language models (LLMs) have made landmark progress in text processing. Against this background, Professor Lu Baoliang's team began studying large EEG models (LEMs). Although text and EEG belong to different fields, the two share common ground in the task of decoding data. Drawing on ideas that proved successful in text processing, the researchers explore new methods for analyzing EEG signals and building BCI models, broadening the research outlook and application prospects of the field.

However, compared with text data, EEG datasets are usually small and vary in format. The development of large EEG models therefore faces the following challenges:

1) Lack of sufficient EEG data

Compared with natural language and image data, large-scale EEG data is extremely difficult to collect. In addition, annotating EEG data usually demands considerable effort from domain experts. As a result, only a small number of labeled datasets are available for specific BCI tasks; the EEG signals for these tasks are usually collected from a few subjects, and their total duration is usually less than tens of hours. Consequently, no existing EEG dataset is large enough to support the training of an LEM.

2) Diverse configurations of EEG signal acquisition

Although the international 10-20 system standardizes EEG signal acquisition, users can still choose EEG caps with different numbers of electrodes, or patch electrodes, to suit the needs of their application. How to process EEG data in different formats so that it matches the input units of the neural Transformer therefore remains an open problem.

3) Lack of an effective paradigm for EEG representation learning

The low signal-to-noise ratio (SNR) of EEG data and the many types of noise it contains are very difficult problems. In addition, balancing temporal and spatial features is very important for effective EEG representation learning. Although various deep-learning-based paradigms (such as CNNs, RNNs and GNNs) can learn representations from raw EEG data, many researchers still prefer to design hand-crafted EEG features because of these problems.

Research results

The goal of this paper is to design a general large EEG model, called LaBraM, that can effectively process EEG data with different numbers of channels and different lengths. Through unsupervised pre-training on a large amount of EEG data, the research team expects the model to acquire a general EEG representation ability that lets it adapt quickly to various downstream EEG tasks. To train LaBraM, the researchers collected more than 2,500 hours of EEG data covering various tasks and formats from 20 public EEG datasets.

First, the raw EEG signal is divided into per-channel EEG segments, which resolves the problem of differing electrode sets and recording lengths. A semantically rich neural tokenizer is then trained through vector-quantized neural spectrum prediction to produce a neural vocabulary. Specifically, the tokenizer is trained by predicting the Fourier spectrum of the original signal. During pre-training, some EEG segments are masked, and the goal of the neural Transformer is to predict the masked tokens from the visible segments. The research team pre-trained three models with 5.8 million, 46 million and 369 million parameters; the largest of these is by far the largest model in the BCI field to date. The team then fine-tuned the models on four different types of downstream tasks, covering both classification and regression.
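The tokenization and masking steps described above can be sketched roughly as follows. This is a minimal illustration in plain Python, not the authors' implementation: the function names, the window length, the toy codebook and the mask ratio are all hypothetical, and the real tokenizer quantizes learned embeddings produced by a neural encoder rather than hand-written vectors.

```python
import cmath
import math
import random

def segment_channels(eeg, window):
    """Split every channel into non-overlapping windows of `window` samples.

    `eeg` is a list of channels, each a list of samples. Returns a list of
    (channel_index, time_index, segment) tuples. Trailing samples that do
    not fill a whole window are dropped.
    """
    segments = []
    for ch, samples in enumerate(eeg):
        for t in range(len(samples) // window):
            segments.append((ch, t, samples[t * window:(t + 1) * window]))
    return segments

def fourier_spectrum(segment):
    """Naive DFT of one segment; returns (amplitudes, phases) per frequency bin.

    A spectrum like this is the kind of target the tokenizer is trained to predict.
    """
    n = len(segment)
    coeffs = [
        sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(segment))
        for k in range(n)
    ]
    return [abs(c) for c in coeffs], [cmath.phase(c) for c in coeffs]

def nearest_code(vector, codebook):
    """Vector quantization step: index of the closest codebook entry (squared L2)."""
    def dist(code):
        return sum((a - b) ** 2 for a, b in zip(vector, code))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))

def random_mask(num_segments, ratio, rng):
    """Pick the segment positions that are hidden during masked pre-training."""
    return sorted(rng.sample(range(num_segments), int(num_segments * ratio)))

# Toy recording: 3 channels, 20 samples each (hypothetical values).
eeg = [[math.sin(2 * math.pi * 2 * i / 8) for i in range(20)] for _ in range(3)]
segments = segment_channels(eeg, window=8)      # 3 channels x 2 windows = 6 segments
amps, phases = fourier_spectrum(segments[0][2])  # spectrum target for one segment

toy_codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # hypothetical neural vocabulary
token = nearest_code([0.9, 0.1], toy_codebook)       # closest entry is index 1
masked = random_mask(len(segments), ratio=0.5, rng=random.Random(0))
```

Because segments are cut per channel, a recording with more electrodes or a longer duration simply yields more segments; nothing in the sketch depends on a fixed channel count.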

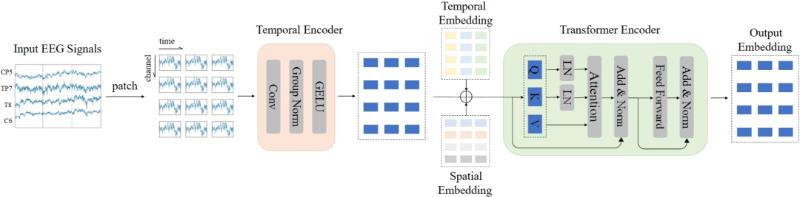

Fig. 1 Overall architecture of LaBraM. First, all input EEG signals are divided into EEG segments using a fixed-length time window, and temporal features are extracted by applying a temporal encoder to each segment. Temporal and spatial embeddings are then added to the segment features to carry temporal and spatial information. Finally, the embedded sequence is passed to the Transformer encoder in segment order to obtain the final output.

The paper introduces the neural Transformer, a general architecture for decoding EEG signals that can process input EEG signals with any number of channels and any duration, as shown in Figure 1. The key operation that makes this possible is dividing EEG signals into segments, an idea inspired by patch embedding in images. Because EEG has high resolution in the time domain, it is very important to extract temporal features before the segments interact through self-attention. A temporal encoder composed of several temporal convolution blocks encodes each EEG segment into a segment embedding. Each temporal convolution block consists of a one-dimensional convolution layer, a group normalization layer and a GELU activation function. To make the model aware of the temporal and spatial information of each segment embedding, the researchers initialize a temporal embedding list and a spatial embedding list. For any segment, the corresponding temporal embedding and spatial embedding are added to its segment embedding. Finally, the embedded sequence is fed directly into the Transformer encoder.
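As a rough illustration of how the temporal and spatial embedding lists let one model accept different electrode layouts, consider the sketch below. It is written in plain Python under stated assumptions: the embedding size, channel names, table sizes, the channel-major ordering of the sequence and all function names are hypothetical, and the segment features are zero-filled placeholders standing in for the output of the temporal encoder.

```python
import random

EMBED_DIM = 4  # toy size chosen for illustration, not the paper's dimension

def make_table(keys, dim, rng):
    """Randomly initialised embedding table: key -> vector (learnable in a real model)."""
    return {k: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for k in keys}

def embed_sequence(segment_feats, channels, spatial_table, temporal_table):
    """Add spatial and temporal embeddings to per-segment features.

    `segment_feats[c][t]` is the feature vector of time window t on channel
    `channels[c]`. Returns one flat list of vectors, ready to be fed to a
    Transformer encoder.
    """
    sequence = []
    for c, name in enumerate(channels):
        s_emb = spatial_table[name]      # looked up by electrode name
        for t, feat in enumerate(segment_feats[c]):
            t_emb = temporal_table[t]    # looked up by window position
            sequence.append([f + s + e for f, s, e in zip(feat, s_emb, t_emb)])
    return sequence

rng = random.Random(0)
spatial = make_table(["FP1", "FP2", "C3", "C4", "O1", "O2"], EMBED_DIM, rng)
temporal = make_table(range(16), EMBED_DIM, rng)

# Two recordings with different electrode sets and lengths share the same tables.
feats_a = [[[0.0] * EMBED_DIM for _ in range(3)] for _ in range(2)]  # 2 channels, 3 windows
feats_b = [[[0.0] * EMBED_DIM for _ in range(2)] for _ in range(4)]  # 4 channels, 2 windows
seq_a = embed_sequence(feats_a, ["C3", "C4"], spatial, temporal)               # 6 vectors
seq_b = embed_sequence(feats_b, ["FP1", "FP2", "O1", "O2"], spatial, temporal)  # 8 vectors
```

Keying the spatial table by electrode name rather than by position in the input is what allows recordings from caps with different electrode counts to be embedded with the same parameters.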

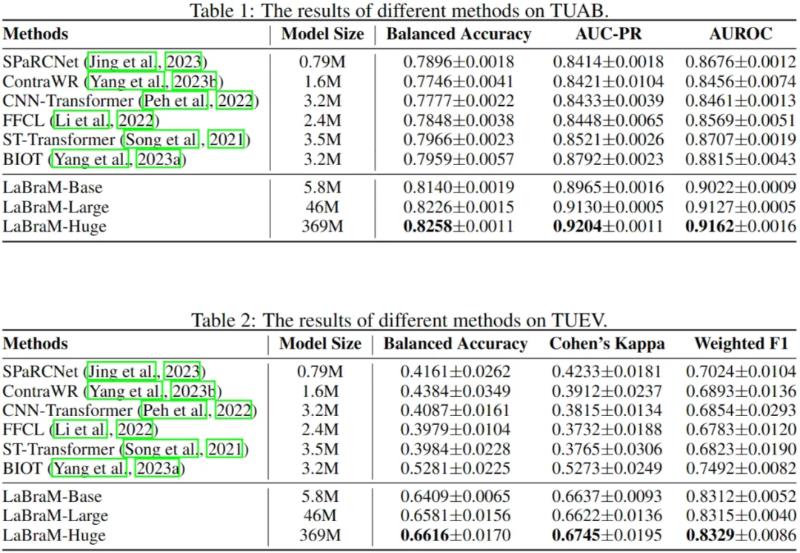

The paper verifies the effectiveness of LaBraM on two downstream datasets: TUAB and TUEV. The authors designed three configurations of LaBraM: LaBraM-Base (5.8M parameters), LaBraM-Large (46M parameters) and LaBraM-Huge (369M parameters).

Tables 1 and 2 above list the best baseline results on TUAB and TUEV alongside the results of LaBraM. The LaBraM-Base model outperforms all baseline models on every evaluation metric for both tasks. The improvement is especially significant on the more challenging TUEV multi-class classification task. Performance also increases with the number of model parameters: LaBraM-Huge performs best, followed by LaBraM-Large and then LaBraM-Base. The research team attributes this performance to the increase in pre-training data and model parameters. The paper concludes that, given enough EEG data, large EEG models can learn more general EEG representations and thereby improve performance on various downstream EEG tasks.

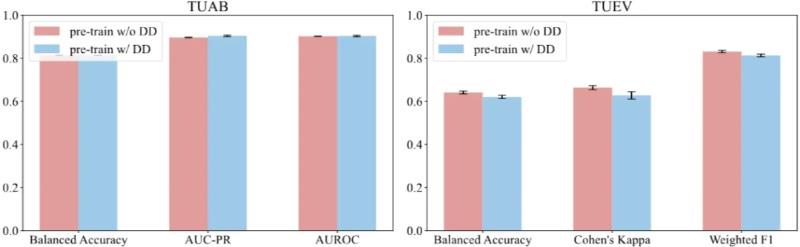

Fig. 2 Comparison of model performance on the TUAB and TUEV datasets depending on whether the downstream dataset is included in pre-training.

During pre-training, the research team wants the model to learn general EEG representations that are not specific to any particular task. Although no label data is used in pre-training, the paper compares the results obtained with and without the downstream task datasets in the pre-training data, in order to rule out any influence of the pre-training data on the downstream tasks. Note that the recordings in TUAB and TUEV do not overlap with the recordings in the pre-training datasets. As shown in Figure 2, whether the downstream task dataset is included in pre-training has little effect on the model's performance on downstream tasks. This shows that the model can learn general EEG representations, and it provides guidance for collecting more EEG data in the future: researchers do not need to spend great effort labeling EEG data for pre-training.

Author information

Jiang Weibang, a Ph.D. student in the Department of Computer Science and Engineering, School of Electronic Information and Electrical Engineering, Shanghai Jiaotong University, is the first author of the paper. Dr. Zhao Liming of Shanghai Zero Unique Technology Co., Ltd. and Professor Lu Baoliang of the Department of Computer Science and Engineering, Shanghai Jiaotong University, are co-authors.

Lu Baoliang is a professor in the Department of Computer Science and Engineering of Shanghai Jiaotong University, a Guangci Professor at Ruijin Hospital affiliated to Shanghai Jiaotong University School of Medicine, a doctoral supervisor and an IEEE Fellow. He is currently the director of the Shanghai Key Laboratory of Intelligent Interaction and Cognitive Engineering of Shanghai Jiaotong University, the executive dean of the Qingyuan Research Institute of Shanghai Jiaotong University, the co-director of the Brain-Computer Interface and Neural Regulation Center of Ruijin Hospital, the director of the Encephalopathy Center-Mihayou Joint Laboratory of Ruijin Hospital, and the founder and chief scientist of Shanghai Zero Unique Technology Co., Ltd. He serves on the editorial boards of IEEE Transactions on Affective Computing, Journal of Neural Engineering, IEEE Transactions on Cognitive and Developmental Systems, Pattern Recognition and Artificial Intelligence, and Journal of Intelligence Science and Technology. He won the 2022 Asia-Pacific Neural Network Society Outstanding Achievement Award, the ACMM 2022 Top Paper Award, the 2021 IEEE Transactions on Affective Computing Best Paper Award, the first prize of the Wu Wenjun Artificial Intelligence Natural Science Award in 2020, and the Best Paper Award of IEEE Transactions on Autonomous Mental Development in 2018. He was selected for Elsevier's list of highly cited scholars in China in 2020, 2021 and 2022. His main research fields include the theory and models of bionic computing, deep learning, emotional intelligence, and emotional brain-computer interfaces and their application in the diagnosis and treatment of emotional disorders.

Paper link: https://openreview.net/forum?id=QzTpTRVtrP